AWS usage fees can be pretty scary when you are learning on your own.

This is even more true if you use AWS at work and know what a monthly bill looks like. Free Tier or not, you can end up with a large bill before you realize it.

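A single EC2 instance costs more than just compute. You also pay for network traffic, for an Elastic IP (EIP) if you give the instance a fixed IP address, and for the EBS volume it always needs.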

You might think you can simply stop the instance, but as you probably know, EIP and EBS continue to be billed even while the instance is stopped.

With that in mind, I heard there is emulation software that lets you drive AWS APIs locally with Terraform, the AWS CLI, the AWS SDKs and so on, so I gave it a try.

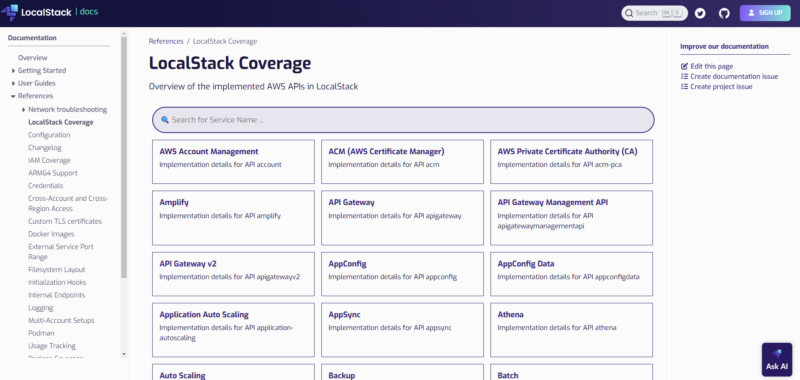

For the list of AWS services available in LocalStack, see the official page below.

It appears to emulate quite a large number of AWS services.

Official site: https://docs.localstack.cloud/references/coverage

The environment is the Ubuntu machine on which I previously installed Docker and Terraform using Ansible.

The middleware versions are as follows.

```console
ubntu@ubntu:~/terrafrom$ docker -v
Docker version 28.0.1, build 068a01e
ubntu@ubntu:~/terrafrom$ terraform -v
Terraform v1.11.1
on linux_amd64
+ provider registry.terraform.io/hashicorp/aws v5.89.0
```

Starting LocalStack from a Docker container

Running LocalStack on Docker

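```bash
sudo docker run --rm -p 4566:4566 localstack/localstack
```

Running this command starts LocalStack listening on port 4566, the single port through which it serves all of the emulated AWS APIs.

The image seems to bundle emulators for many different services.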

```console
ubntu@ubntu:~$ sudo docker run --rm -p 4566:4566 localstack/localstack
[sudo] password for ubntu:
Unable to find image 'localstack/localstack:latest' locally
latest: Pulling from localstack/localstack
0d653a9bda6a: Pull complete
183f0922284a: Pull complete
5dbb3b698b72: Pull complete
0c5ce2cb4ecc: Pull complete
c865606d7691: Pull complete
c2bcb89268c6: Pull complete
6a1f077a2332: Pull complete
2a836eb6ab14: Pull complete
4f4fb700ef54: Pull complete
623c09021981: Pull complete
ede3144a98ac: Pull complete
f74433411e39: Pull complete
5bbce2af80ab: Pull complete
878935a06049: Pull complete
7e4b58c6bec7: Pull complete
8392d87fc1a1: Pull complete
c7273b86939d: Pull complete
28b71b943bfb: Pull complete
2a7a48a942ca: Pull complete
d2e229627b75: Pull complete
5a7711d8b189: Pull complete
fd5d33b76a50: Pull complete
ec678718ff24: Pull complete
f6d090a4bad6: Pull complete
Digest: sha256:18a52d5737512f41a455831f0c8b13bb65f9bfb621e9fbcc27a8661da9e7afd9
Status: Downloaded newer image for localstack/localstack:latest

LocalStack version: 4.2.1.dev21
LocalStack build date: 2025-03-06
LocalStack build git hash: 976046d07

Ready.
```

LocalStack appears to be running without problems.
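Rather than watching the terminal for `Ready.`, a script can wait until LocalStack answers on its health endpoint before running Terraform. The sketch below assumes the `/_localstack/health` endpoint that recent LocalStack versions serve on port 4566 and that `curl` is installed; `wait_for_localstack` and its retry defaults are hypothetical names and values of my own, not part of LocalStack.

```bash
# Sketch: poll LocalStack's health endpoint until it answers or we give up.
# wait_for_localstack is a hypothetical helper; assumes curl is installed.
# Usage: wait_for_localstack [URL] [RETRIES]
wait_for_localstack() {
  local url="${1:-http://localhost:4566/_localstack/health}"
  local retries="${2:-30}"
  local attempt=1
  while [ "$attempt" -le "$retries" ]; do
    # -s: silent, -f: fail on HTTP errors, --max-time: do not hang on a dead port
    if curl -sf --max-time 2 "$url" > /dev/null 2>&1; then
      echo "LocalStack is ready: $url"
      return 0
    fi
    # Wait one second between attempts, but not after the last one
    if [ "$attempt" -lt "$retries" ]; then
      sleep 1
    fi
    attempt=$((attempt + 1))
  done
  echo "LocalStack did not respond at $url after $retries attempts" >&2
  return 1
}
```

For example, start the container in the background with `sudo docker run -d --rm -p 4566:4566 localstack/localstack` and then run `wait_for_localstack && terraform apply`. Polling the HTTP endpoint avoids depending on the exact wording of the startup log.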

Running Terraform

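Prepare two .tf files: provider.tf for the Terraform settings and main.tf for the resources. The configuration is minimal and only creates a single S3 bucket, my-localstack-bucket.

provider.tf:

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

provider "aws" {
  region = "ap-northeast-1"

  # Dummy credentials for LocalStack, so the provider does not depend on ~/.aws/credentials
  access_key = "test"
  secret_key = "test"

  endpoints {
    s3 = "http://localhost:4566" # Point the S3 endpoint at LocalStack
  }

  # Skip the checks that would otherwise contact STS or the EC2 instance metadata service
  skip_credentials_validation = true
  skip_metadata_api_check     = true
  skip_requesting_account_id  = true

  # Use path-style URLs (http://localhost:4566/<bucket>) instead of
  # virtual-hosted-style URLs (<bucket>.localhost:4566)
  s3_use_path_style = true
}
```

main.tf:

```hcl
resource "aws_s3_bucket" "my_bucket" {
  bucket = "my-localstack-bucket"
}
```

Make sure main.tf and provider.tf are in the current directory where you will run Terraform.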

```console
ubntu@ubntu:~/terrafrom$ ls -la main.tf provider.tf
-rw------- 1 ubntu ubntu  78 Mar  6 16:22 main.tf
-rw------- 1 ubntu ubntu 567 Mar  6 16:20 provider.tf
```

Now run Terraform, starting with initialization:

```bash
terraform init
```

Output:

```console
ubntu@ubntu:~$ terraform init
Initializing the backend...
Initializing provider plugins...
- Finding latest version of hashicorp/aws...
- Installing hashicorp/aws v5.89.0...
- Installed hashicorp/aws v5.89.0 (signed by HashiCorp)
Terraform has created a lock file .terraform.lock.hcl to record the provider
selections it made above. Include this file in your version control repository
so that Terraform can guarantee to make the same selections by default when
you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

Initialization completed successfully.

Next, use terraform plan to check that the resource will be created as expected.

```console
ubntu@ubntu:~/terrafrom$ terraform plan

Terraform used the selected providers to generate the following execution plan.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # aws_s3_bucket.my_bucket will be created
  + resource "aws_s3_bucket" "my_bucket" {
      + acceleration_status = (known after apply)
      + acl = (known after apply)
      + arn = (known after apply)
      + bucket = "my-localstack-bucket"
      + bucket_domain_name = (known after apply)
      + bucket_prefix = (known after apply)
      + bucket_regional_domain_name = (known after apply)
      + force_destroy = false
      + hosted_zone_id = (known after apply)
      + id = (known after apply)
      + object_lock_enabled = (known after apply)
      + policy = (known after apply)
      + region = (known after apply)
      + request_payer = (known after apply)
      + tags_all = (known after apply)
      + website_domain = (known after apply)
      + website_endpoint = (known after apply)

      + cors_rule (known after apply)
      + grant (known after apply)
      + lifecycle_rule (known after apply)
      + logging (known after apply)
      + object_lock_configuration (known after apply)
      + replication_configuration (known after apply)
      + server_side_encryption_configuration (known after apply)
      + versioning (known after apply)
      + website (known after apply)
    }

Plan: 1 to add, 0 to change, 0 to destroy.
```

Since I specified only the bucket name and nothing else, the plan shows the bucket being created with default settings.

That is exactly what I expected.

Next, run terraform apply to actually create the resource.

```console
ubntu@ubntu:~/terrafrom$ terraform apply

Terraform used the selected providers to generate the following execution plan.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # aws_s3_bucket.my_bucket will be created
  + resource "aws_s3_bucket" "my_bucket" {
      + acceleration_status = (known after apply)
      + acl = (known after apply)
      + arn = (known after apply)
      + bucket = "my-localstack-bucket"
      + bucket_domain_name = (known after apply)
      + bucket_prefix = (known after apply)
      + bucket_regional_domain_name = (known after apply)
      + force_destroy = false
      + hosted_zone_id = (known after apply)
      + id = (known after apply)
      + object_lock_enabled = (known after apply)
      + policy = (known after apply)
      + region = (known after apply)
      + request_payer = (known after apply)
      + tags_all = (known after apply)
      + website_domain = (known after apply)
      + website_endpoint = (known after apply)

      + cors_rule (known after apply)
      + grant (known after apply)
      + lifecycle_rule (known after apply)
      + logging (known after apply)
      + object_lock_configuration (known after apply)
      + replication_configuration (known after apply)
      + server_side_encryption_configuration (known after apply)
      + versioning (known after apply)
      + website (known after apply)
    }

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

aws_s3_bucket.my_bucket: Creating...
aws_s3_bucket.my_bucket: Creation complete after 1s [id=my-localstack-bucket]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.
```

The bucket was created without any problems.

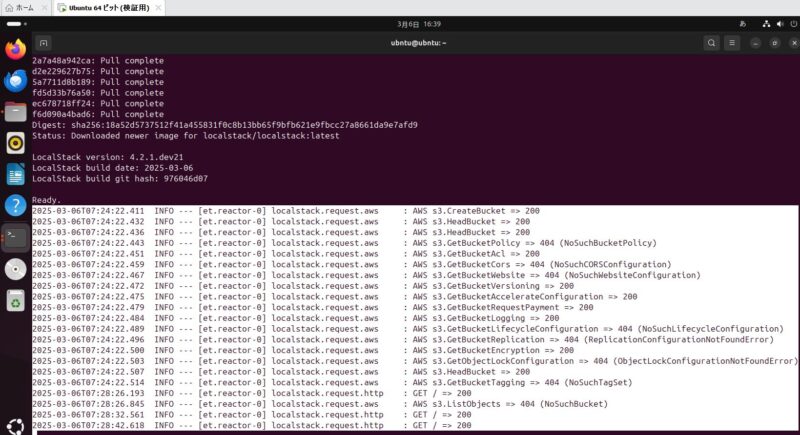

The Docker logs also show LocalStack entries for the S3 requests Terraform made.

```
2025-03-06T07:24:22.411 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.CreateBucket => 200
2025-03-06T07:24:22.432 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.HeadBucket => 200
2025-03-06T07:24:22.436 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.HeadBucket => 200
2025-03-06T07:24:22.443 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketPolicy => 404 (NoSuchBucketPolicy)
2025-03-06T07:24:22.451 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketAcl => 200
2025-03-06T07:24:22.459 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketCors => 404 (NoSuchCORSConfiguration)
2025-03-06T07:24:22.467 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketWebsite => 404 (NoSuchWebsiteConfiguration)
2025-03-06T07:24:22.472 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketVersioning => 200
2025-03-06T07:24:22.475 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketAccelerateConfiguration => 200
2025-03-06T07:24:22.479 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketRequestPayment => 200
2025-03-06T07:24:22.484 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketLogging => 200
2025-03-06T07:24:22.489 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketLifecycleConfiguration => 404 (NoSuchLifecycleConfiguration)
2025-03-06T07:24:22.496 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketReplication => 404 (ReplicationConfigurationNotFoundError)
2025-03-06T07:24:22.500 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketEncryption => 200
2025-03-06T07:24:22.503 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetObjectLockConfiguration => 404 (ObjectLockConfigurationNotFoundError)
2025-03-06T07:24:22.507 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.HeadBucket => 200
2025-03-06T07:24:22.514 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.GetBucketTagging => 404 (NoSuchTagSet)
2025-03-06T07:28:26.193 INFO --- [et.reactor-0] localstack.request.http : GET / => 200
2025-03-06T07:28:26.845 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.ListObjects => 404 (NoSuchBucket)
2025-03-06T07:28:32.561 INFO --- [et.reactor-0] localstack.request.http : GET / => 200
2025-03-06T07:28:42.618 INFO --- [et.reactor-0] localstack.request.http : GET / => 200
```
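To see at a glance which operations Terraform issued, the log lines can be tallied by operation name. A minimal sketch, assuming the `localstack.request.aws : AWS <service>.<Operation> => <status>` format shown above; `count_aws_ops` is a hypothetical helper name, not a LocalStack tool.

```bash
# Sketch: tally AWS operations in LocalStack log lines read from stdin.
# Prints one "<service>.<Operation> <count>" line per operation, sorted by name.
# Lines from localstack.request.http (plain "GET /" requests) are ignored.
count_aws_ops() {
  grep -oE 'AWS [a-z0-9-]+\.[A-Za-z0-9]+' \
    | sort \
    | uniq -c \
    | awk '{ print $3, $1 }'
}
```

Because the container was started with `--rm` and no `--name`, one way to feed it the logs is `sudo docker logs "$(sudo docker ps -q --filter ancestor=localstack/localstack)" 2>&1 | count_aws_ops`, which assumes only one LocalStack container is running. For the excerpt above, it reports `s3.HeadBucket 3` among the other operations.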

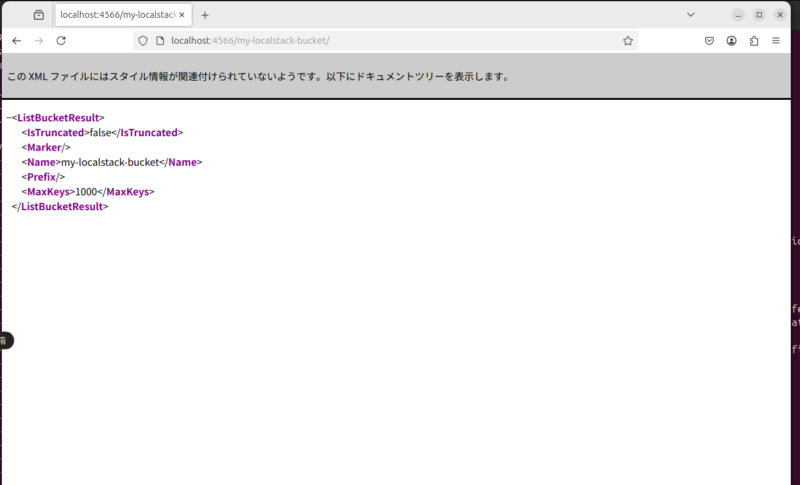

Let's fetch the bucket listing.

```console
ubntu@ubntu:~/terrafrom$ curl -X GET "http://localhost:4566/my-localstack-bucket/"
<?xml version='1.0' encoding='utf-8'?>
<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/"><IsTruncated>false</IsTruncated><Marker /><Name>my-localstack-bucket</Name><Prefix /><MaxKeys>1000</MaxKeys></ListBucketResult>
```

You can also open the same URL in a browser.
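Once the bucket contains objects, this response holds one `<Contents>` element per object. For a quick look at just the object keys from the shell, a grep/sed sketch is enough. `list_s3_keys` is a hypothetical helper name; it assumes the response format shown here, does not decode XML entities (a key containing `&` would come back as `&amp;`), and ignores pagination beyond the 1000-key `MaxKeys` limit.

```bash
# Sketch: print each object key from an S3 ListBucketResult XML document on stdin.
# Assumes keys contain no "<" and does not decode XML entities or follow IsTruncated.
list_s3_keys() {
  grep -o '<Key>[^<]*</Key>' \
    | sed -e 's/^<Key>//' -e 's/<\/Key>$//'
}
```

`curl -s "http://localhost:4566/my-localstack-bucket/" | list_s3_keys` prints one key per line. For anything beyond a quick check, the AWS CLI installed in the next step (`aws --endpoint-url=http://localhost:4566 s3 ls s3://my-localstack-bucket --recursive`) is the more robust option.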

Installing the AWS CLI on Ubuntu

The AWS CLI makes it much easier to call a variety of APIs than raw HTTP requests, so install it on Ubuntu.

Python virtual environment

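A virtual environment lets you install the packages you need without affecting the system-wide Python installation. On recent Ubuntu releases, pip also refuses by default to install packages into the system Python (the PEP 668 "externally-managed-environment" error), so a virtual environment is the simplest way to use `pip install awscli` here.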

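```bash
# Install the required packages
sudo apt install python3-venv python3-dev

# Create the virtual environment
python3 -m venv ~/awscli-env

# Activate the virtual environment
source ~/awscli-env/bin/activate

# Install the AWS CLI
pip install awscli

# Check the installation
aws --version
```

Deactivate the virtual environment with the following command:

```bash
deactivate
```

Note that `pip install awscli` installs AWS CLI version 1. For the standard installation (AWS CLI version 2), see the official AWS documentation: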

https://docs.aws.amazon.com/ja_jp/cli/latest/userguide/getting-started-install.html

With the installation complete, check the version.

The `(awscli-env)` prefix on the prompt shows that the command is running inside the virtual environment.

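```console
(awscli-env) ubntu@ubntu:~/terrafrom$ aws --version
aws-cli/1.38.7 Python/3.12.3 Linux/6.8.0-55-generic botocore/1.37.7
```

Next, configure the AWS CLI. LocalStack does not validate credentials by default, so dummy values such as `test` are sufficient.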

```console
(awscli-env) ubntu@ubntu:~/terrafrom$ aws configure
AWS Access Key ID [None]: test
AWS Secret Access Key [None]: test
Default region name [None]: ap-northeast-1
Default output format [None]: json
```

When to use a virtual environment, and when not to

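When a virtual environment makes sense:

- Dependency management: convenient when each project needs different package versions. You can manage libraries and tools per project without polluting the system-wide Python environment.
- System stability: package versions are pinned inside the environment, so you can work without interfering with other projects or the system environment.
- Development and production can use matching dependencies, so behavior is reproduced consistently. This is especially useful in team development and CI/CD.
- Installing into a project-specific environment lets you work from a clean state without affecting other projects.

When you may not need one:

- When you only use a tool or script: for tools or scripts with few dependencies, there is no need for a virtual environment, and installing directly on the system is simpler.
- When you want a tool available system-wide: if you plan to keep using the same tool and prefer simple management, skipping the virtual environment is a valid option.
- When managing virtual environments is a burden: if setting them up and maintaining them is cumbersome, and the tools and libraries you need are already installed in a system environment that is easy to manage, you can do without them.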
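Every AWS CLI command against LocalStack needs `--endpoint-url=http://localhost:4566`. If typing that gets tedious, a small shell function can add it for you, and the dummy credentials can be exported as environment variables instead of being stored by `aws configure`. This is a minimal sketch: `awsl` and `LOCALSTACK_ENDPOINT` are hypothetical names of my own, whereas `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY` and `AWS_DEFAULT_REGION` are standard variables read by the AWS CLI. LocalStack also publishes its own `awslocal` wrapper (the `awscli-local` package) for the same purpose.

```bash
# Sketch: call the AWS CLI against LocalStack without typing --endpoint-url each time.
# LOCALSTACK_ENDPOINT and awsl are hypothetical names, not part of the AWS CLI.

# Dummy credentials and region, the same values entered in aws configure
export AWS_ACCESS_KEY_ID=test
export AWS_SECRET_ACCESS_KEY=test
export AWS_DEFAULT_REGION=ap-northeast-1

# Default to the local LocalStack port unless the caller already set an endpoint
LOCALSTACK_ENDPOINT="${LOCALSTACK_ENDPOINT:-http://localhost:4566}"

awsl() {
  # Forward all arguments to the AWS CLI with the LocalStack endpoint added
  aws --endpoint-url="$LOCALSTACK_ENDPOINT" "$@"
}
```

After sourcing this in your shell, `awsl s3 ls` runs `aws --endpoint-url=http://localhost:4566 s3 ls`, and pointing `LOCALSTACK_ENDPOINT` at another host requires no other change.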

Operating the S3 API with the AWS CLI

Listing buckets with the following command confirms that my-localstack-bucket, the resource created by Terraform, exists.

```console
(awscli-env) ubntu@ubntu:~/terrafrom$ aws --endpoint-url=http://localhost:4566 s3 ls
```

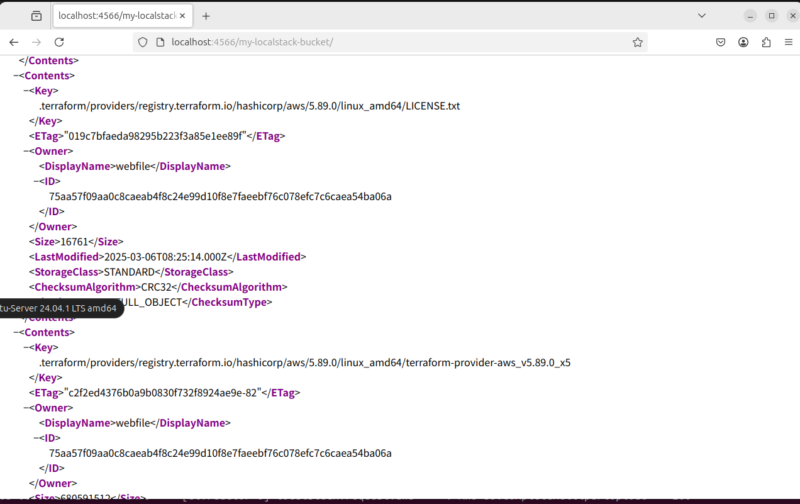

```console
2025-03-06 16:24:22 my-localstack-bucket
```

Next, use the `s3 sync` command to synchronize the current directory with the bucket.

The files generated by Terraform, such as the lock file, the state file and the downloaded provider plugin, were uploaded as well.

```console
(awscli-env) ubntu@ubntu:~/terrafrom$ aws --endpoint-url=http://localhost:4566 s3 sync . s3://my-localstack-bucket
upload: ./.terraform.lock.hcl to s3://my-localstack-bucket/.terraform.lock.hcl
upload: .terraform/providers/registry.terraform.io/hashicorp/aws/5.89.0/linux_amd64/LICENSE.txt to s3://my-localstack-bucket/.terraform/providers/registry.terraform.io/hashicorp/aws/5.89.0/linux_amd64/LICENSE.txt
upload: ./terraform.tfstate to s3://my-localstack-bucket/terraform.tfstate
upload: ./main.tf to s3://my-localstack-bucket/main.tf
upload: ./provider.tf to s3://my-localstack-bucket/provider.tf
upload: .terraform/providers/registry.terraform.io/hashicorp/aws/5.89.0/linux_amd64/terraform-provider-aws_v5.89.0_x5 to s3://my-localstack-bucket/.terraform/providers/registry.terraform.io/hashicorp/aws/5.89.0/linux_amd64/terraform-provider-aws_v5.89.0_x5
```

Accessing the bucket URL again returns XML that now includes the object information, which shows the S3 API calls worked as expected.

```console
(awscli-env) ubntu@ubntu:~/terrafrom$ curl -X GET "http://localhost:4566/my-localstack-bucket/"
<?xml version="1.0" encoding="utf-8"?>
<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
  <IsTruncated>false</IsTruncated>
  <Marker />
  <Name>my-localstack-bucket</Name>
  <Prefix />
  <MaxKeys>1000</MaxKeys>
  <Contents>
    <Key>.terraform.lock.hcl</Key>
    <ETag>"1d16046866de7f4ca3eaa217d097250b"</ETag>
    <Owner>
      <DisplayName>webfile</DisplayName>
      <ID>75aa57f09aa0c8caeab4f8c24e99d10f8e7faeebf76c078efc7c6caea54ba06a</ID>
    </Owner>
    <Size>1406</Size>
    <LastModified>2025-03-06T08:25:14.000Z</LastModified>
    <StorageClass>STANDARD</StorageClass>
    <ChecksumAlgorithm>CRC32</ChecksumAlgorithm>
    <ChecksumType>FULL_OBJECT</ChecksumType>
  </Contents>
  <Contents>
    <Key>.terraform/providers/registry.terraform.io/hashicorp/aws/5.89.0/linux_amd64/LICENSE.txt</Key>
    <ETag>"019c7bfaeda98295b223f3a85e1ee89f"</ETag>
    <Owner>
      <DisplayName>webfile</DisplayName>
      <ID>75aa57f09aa0c8caeab4f8c24e99d10f8e7faeebf76c078efc7c6caea54ba06a</ID>
    </Owner>
    <Size>16761</Size>
    <LastModified>2025-03-06T08:25:14.000Z</LastModified>
    <StorageClass>STANDARD</StorageClass>
    <ChecksumAlgorithm>CRC32</ChecksumAlgorithm>
    <ChecksumType>FULL_OBJECT</ChecksumType>
  </Contents>
  <Contents>
    <Key>.terraform/providers/registry.terraform.io/hashicorp/aws/5.89.0/linux_amd64/terraform-provider-aws_v5.89.0_x5</Key>
    <ETag>"c2f2ed4376b0a9b0830f732f8924ae9e-82"</ETag>
    <Owner>
      <DisplayName>webfile</DisplayName>
      <ID>75aa57f09aa0c8caeab4f8c24e99d10f8e7faeebf76c078efc7c6caea54ba06a</ID>
    </Owner>
    <Size>680591512</Size>
    <LastModified>2025-03-06T08:25:14.000Z</LastModified>
    <StorageClass>STANDARD</StorageClass>
    <ChecksumAlgorithm>CRC32</ChecksumAlgorithm>
    <ChecksumType>COMPOSITE</ChecksumType>
  </Contents>
  <Contents>
    <Key>main.tf</Key>
    <ETag>"95cf9d1a1698c8eec937e6d9ab575826"</ETag>
    <Owner>
      <DisplayName>webfile</DisplayName>
      <ID>75aa57f09aa0c8caeab4f8c24e99d10f8e7faeebf76c078efc7c6caea54ba06a</ID>
    </Owner>
    <Size>78</Size>
    <LastModified>2025-03-06T08:25:14.000Z</LastModified>
    <StorageClass>STANDARD</StorageClass>
    <ChecksumAlgorithm>CRC32</ChecksumAlgorithm>
    <ChecksumType>FULL_OBJECT</ChecksumType>
  </Contents>
  <Contents>
    <Key>provider.tf</Key>
    <ETag>"b99d94ff0822d5a3372c70e154487684"</ETag>
    <Owner>
      <DisplayName>webfile</DisplayName>
      <ID>75aa57f09aa0c8caeab4f8c24e99d10f8e7faeebf76c078efc7c6caea54ba06a</ID>
    </Owner>
    <Size>567</Size>
    <LastModified>2025-03-06T08:25:14.000Z</LastModified>
    <StorageClass>STANDARD</StorageClass>
    <ChecksumAlgorithm>CRC32</ChecksumAlgorithm>
    <ChecksumType>FULL_OBJECT</ChecksumType>
  </Contents>
  <Contents>
    <Key>terraform.tfstate</Key>
    <ETag>"fee9dc987d966da682bcd0e5438bcf37"</ETag>
    <Owner>
      <DisplayName>webfile</DisplayName>
      <ID>75aa57f09aa0c8caeab4f8c24e99d10f8e7faeebf76c078efc7c6caea54ba06a</ID>
    </Owner>
    <Size>2647</Size>
    <LastModified>2025-03-06T08:25:14.000Z</LastModified>
    <StorageClass>STANDARD</StorageClass>
    <ChecksumAlgorithm>CRC32</ChecksumAlgorithm>
    <ChecksumType>FULL_OBJECT</ChecksumType>
  </Contents>
</ListBucketResult>
```

Let's also check the Docker logs.

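The log contains far more requests than there are files because of multipart upload (`AWS s3.CreateMultipartUpload => 200`). The five small files were each sent with a single PutObject, while the roughly 680 MB provider binary (terraform-provider-aws_v5.89.0_x5, 680,591,512 bytes) was split into 82 UploadPart requests and finished with CompleteMultipartUpload. The `-82` suffix on that object's ETag in the XML above also reflects the 82 parts.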
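The part count can be checked with a bit of arithmetic. This sketch assumes the AWS CLI's default S3 transfer settings, `multipart_threshold` and `multipart_chunksize` of 8MB, which the CLI interprets as 8 MiB (8,388,608 bytes); the function name `s3_upload_parts` is hypothetical. A file at or above the threshold is split into ceil(size / chunksize) parts, and anything smaller is sent with a single PutObject.

```bash
# Sketch: estimate how many requests the AWS CLI sends to upload one file.
# Assumes the default multipart_threshold = 8MB and multipart_chunksize = 8MB,
# which the CLI interprets as 8 MiB (8 * 1024 * 1024 bytes).
# Ignores the CLI's automatic chunk-size increase for files that would exceed 10,000 parts.
MULTIPART_THRESHOLD=$((8 * 1024 * 1024))
MULTIPART_CHUNKSIZE=$((8 * 1024 * 1024))

# s3_upload_parts SIZE_IN_BYTES
# Prints the number of UploadPart requests for a multipart upload,
# or 1 for a file small enough to be sent with a single PutObject.
s3_upload_parts() {
  local size="$1"
  if [ "$size" -ge "$MULTIPART_THRESHOLD" ]; then
    # Ceiling division: every started chunk becomes one part
    echo $(( (size + MULTIPART_CHUNKSIZE - 1) / MULTIPART_CHUNKSIZE ))
  else
    echo 1
  fi
}
```

`s3_upload_parts 680591512` prints `82` for the provider binary, matching the number of `UploadPart` lines in the log and the `-82` ETag suffix, while each of the other five files (78 to 16,761 bytes) prints `1`, that is, one `PutObject` each.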

```
2025-03-06T08:25:14.845 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.CreateMultipartUpload => 200
2025-03-06T08:25:14.854 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.PutObject => 200
2025-03-06T08:25:14.856 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.PutObject => 200
2025-03-06T08:25:14.937 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.PutObject => 200
2025-03-06T08:25:14.944 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.PutObject => 200
2025-03-06T08:25:14.955 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.PutObject => 200
2025-03-06T08:25:15.779 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:15.909 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:16.066 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:16.296 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:16.403 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:16.501 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:16.646 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:16.682 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:16.710 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:16.792 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:16.934 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:17.037 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:17.241 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:17.449 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:17.633 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:17.756 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:17.902 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:17.904 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:18.199 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:18.227 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:18.316 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:18.355 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:18.392 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:18.418 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:18.522 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:18.690 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:18.714 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:18.855 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:19.162 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:19.429 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:19.470 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:19.574 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:19.656 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:19.752 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:19.877 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:20.029 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:20.040 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:20.042 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:20.212 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:20.425 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:20.487 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:20.827 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:20.871 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:20.903 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:20.959 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:20.962 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:21.366 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:21.432 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:21.449 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:21.495 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:21.519 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:21.689 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:21.822 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:21.830 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:21.923 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:22.059 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:22.387 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:22.482 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:22.493 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:22.668 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:22.738 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:22.865 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:22.927 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:23.040 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:23.089 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:23.178 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:23.425 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:23.426 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:23.540 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:23.554 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:23.706 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:23.711 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:23.981 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:24.017 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:24.090 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:24.105 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:24.197 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:24.513 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:24.569 INFO --- [et.reactor-4] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:24.614 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:24.636 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:24.668 INFO --- [et.reactor-2] localstack.request.aws : AWS s3.UploadPart => 200
2025-03-06T08:25:26.879 INFO --- [et.reactor-0] localstack.request.aws : AWS s3.CompleteMultipartUpload => 200
2025-03-06T08:28:02.188 INFO --- [et.reactor-3] localstack.request.aws : AWS s3.ListObjects => 200
2025-03-06T08:31:01.755 INFO --- [et.reactor-1] localstack.request.aws : AWS s3.ListObjects => 200
```

Afterword

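Until I came across LocalStack, I assumed AWS APIs could only be exercised against a real AWS environment. Being able to emulate them locally this easily makes it a very good tool for anyone learning AWS. The range of supported services appears to be limited, so further expansion would be very welcome. Since Terraform and the AWS CLI work against it, it can also be used to check and test IaC behavior before running it in production, and for the services it supports I think it could be useful in real work as well. As an AWS sandbox built from containers and IaC, it also looks like a good learning environment for new cloud engineers.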

I hope this article is helpful to anyone trying out LocalStack.